Apple Child Safety & CSAM Detection — Truth, lies, and technical details - TechnW3

Apple's new child safety features and the ensuing confusion, controversy, and consternation over them… it's a lot. But, the right to privacy vs. the exploitation and abuse of children is about as hard as it gets. Which is why you will find InfoSec people for and against this. Child advocacy people for and against this. And yes, even Apple internal people for and against this.

There are also critical moral, ethical, and philosophical arguments, regardless of any and all of the technology involved. But a lot of the arguments are currently being predicated on the technology. And they're getting it wrong. Unintentionally or deliberately. Tactically or callously. People keep getting the basic technology… wrong. So, I'm going to explain just exactly how these new child safety features work and answer as many of your questions about them as I possibly can so that when it comes to those critical moral, ethical, and philosophical arguments, you have the best information possible so you can make the best decision possible for you and yours.

So, what are the new child safety features?

- Siri and Search intermediation and interception, to help people… get help with the prevention of child exploitation and child sexual abuse material, CSAM, and to provide information and resources.

- Communication Safety, to help disrupt grooming cycles and prevent child predation. The Messages app and parental controls are being updated to enable warnings for sexually explicit images sent or received by minors in Messages. And, for children 12 years old and under, the option for a parent or guardian to be notified if the child chooses to view the images anyway.

- CSAM detection, to stop collections of CSAM being stored or trafficked through iCloud Photo Library on Apple's servers. The uploader is being updated to match and flag any such images on upload, using a fairly complex cryptographic system, which Apple believes better maintains user privacy compared to simply scanning entire online photo libraries the way Google, Microsoft, Facebook, Dropbox, and others have been doing for upwards of a decade already.

When and where are these new features coming?

As part of iOS 15, iPadOS 15, watchOS 8, and macOS Monterey later this fall, but only in the U.S. for now.

Only in the U.S.?

Yes, only in the U.S., but Apple has said they will consider adding more countries and regions on a case-by-case basis in the future. In keeping with local laws, and as Apple deems appropriate.

So, what are the objections to these new features?

- That Apple's not our parent and shouldn't be intermediating or intervening in Siri or Search queries for any reason, ever. That's between you and your search engine. But there's actually been very little pushback on this part beyond the purely philosophical at this point.

- That the Communication Safety system might be misused by abusive parents to further control children, and may out non-hetero children, exposing them to further abuse and abandonment.

- That while Apple intends for CSAM detection on-upload to be far more narrow in scope and more privacy-centric, the on-device matching aspect actually makes it more of a violation, and that the mere existence of the system creates the potential for misuse and abuse beyond CSAM, especially by authoritarian governments already trying to pressure their way in.

How does the new Siri and Search feature work?

If a user asks for help in reporting instances of child abuse and exploitation or of CSAM, they'll be pointed to resources for where and how to file those reports.

If a user tries to query Siri or Search for CSAM, the system will intervene, explain how the topic is harmful and problematic, and provide helpful resources from partners.

Are these queries reported to Apple or law enforcement?

No. They're built into the existing, secure, private Siri and Search system where no identifying information is provided to Apple about the accounts making the queries, and so there's nothing that can be forwarded to any law enforcement.

How does the Communications Safety feature work?

If a device is set up for a child, meaning it's using Apple's existing Family Sharing and Parental Control system, a parent or guardian can choose to enable Communication Safety. It's not enabled by default, it's opt-in.

At that point, the Messages app will pop up a warning any time the child device tries to send an image containing explicit sexual activity, or tries to view one it has received. That's the Messages app, not the iMessage service, which might sound like a bullshit distinction but is actually an important technical one, because it means it applies to SMS/MMS green bubbles as well as blue.

This is detected using on-device machine learning, basically computer vision, the same way the Photos app has let you search for cars or cats. In order to do that, it has to detect cars or cats in images.

This time it isn't being done in the Photos app, though, but in the Messages app. And it's being done on-device, with zero communication to or from Apple, because Apple wants zero knowledge of the images in your Messages app.

This is completely different from how the CSAM detection feature works, but I'll get to that in a minute.

If the device is set up for a child, and the Messages app detects the receipt of an explicit image, instead of rendering that image, it'll render a blurred version of the image and present a View Photo option in tiny text beneath it. If the child taps on that text, Messages will pop up a warning screen explaining the potential dangers and problems associated with receiving explicit images. It's done in very child-centric language, but basically it says that these images can be used for grooming by child predators and that the images could have been taken or shared without consent.

Optionally, parents or guardians can turn on notifications for children 12-and-under, and only for children 12-and-under, as in it simply cannot be turned on for children 13 or over. If notifications are turned on, and the child taps on View Photo, and also taps through the first warning screen, a second warning screen is presented informing the child that if they tap to view the image again, their parents will be notified, but also that they don't have to view anything they don't want to, along with a link to get help.

If the child does tap View Photo again, they'll get to see the photo, but a notification will be sent to the parent device that set up the child device. That's by no means to equate the potential consequences or harm, but it's similar to how parents or guardians have the option to get notifications for child devices making in-app purchases.

Communication Safety works pretty much the same for sending images. There's a warning before the image is sent, and, if 12-or-under and enabled by the parent, a second warning that the parent will be notified if the image is sent, and then if the image is sent, the notification will be sent.
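The receiving flow described above can be sketched as a small decision function. This is only one reading of the behavior as explained here, not Apple's code: `ChildAccount`, its fields, and `screens_before_viewing` are hypothetical names made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class ChildAccount:
    # Hypothetical model of a Family Sharing child account.
    age: int
    communication_safety_on: bool  # opt-in, set by a parent or guardian
    parent_notifications_on: bool  # separate opt-in, only honored for 12 and under


def screens_before_viewing(account: ChildAccount, is_explicit: bool):
    """Return (screens shown before the image appears, whether the parent
    is notified if the child taps through all of them)."""
    if not (account.communication_safety_on and is_explicit):
        return [], False
    screens = ["blurred image with View Photo", "first warning"]
    notify = account.parent_notifications_on and account.age <= 12
    if notify:
        screens.append("second warning: parent will be notified")
    return screens, notify


print(screens_before_viewing(ChildAccount(11, True, True), True))
print(screens_before_viewing(ChildAccount(15, True, True), True))
```

The 15-year-old account gets the blur and the first warning but never the notification step, no matter what the parent toggles. Sending would follow the same shape, with a warning before the image is sent in place of the blurred preview.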

Doesn't this break end-to-end encryption?

No, not technically, though well-intentioned, knowledgeable people can and will argue and disagree about the spirit and the principle involved.

The parental controls and warnings are all being done client-side in the Messages app. None of it is server-side in the iMessage service. That has the benefit of making it work with SMS/MMS or the green bubble as well as blue bubble images.

Child devices will throw warnings before and after images are sent or received, but those images are sent and received fully end-to-end encrypted through the service, just like always.

In terms of end-to-end encryption, Apple doesn't consider adding a warning about content before sending an image any different than adding a warning about file size over cellular data, or… I guess… a sticker before sending an image. Or sending a notification from the client app of a 12-year-or-under child device to a parent device after receiving a message any different than using the client app to forward that message to the parent. In other words, the opt-in act of setting up the notification pre-or-post transit is the same type of explicit user action as forwarding pre-or-post transit.

And the transit itself remains 100% end-to-end encrypted.

Does this block the images or messages?

No. Communication Safety has nothing to do with messages, only images. So no messages are ever blocked. And images are still sent and received as normal; Communication Safety only kicks in on the client side to warn and potentially notify about them.

Messages has had a block contact feature for a long time, though, and while that's totally separate from this, it can be used to stop any unwanted and unwelcome messages.

Is it really images only?

It's sexually explicit images only. Nothing other than sexually explicit images, not other types of images, not text, not links, not anything else will trigger the Communication Safety system, so… conversations, for example, wouldn't get a warning or optional notification.

Does Apple know when child devices are sending or receiving these images?

No. Apple set it up on-device because they don't want to know. Just like they've done face detection for search, and more recently, full computer vision for search on-device, for years, because Apple wants zero knowledge about the images on the device.

The basic warnings are between the child and their device. The optional notifications for 12-or-under child devices are between the child, their device, and the parent device. And that notification is sent end-to-end encrypted as well, so Apple has zero knowledge as to what the notification is about.

Are these images reported to law enforcement or anyone else?

No. There's no reporting functionality beyond the parent device notification at all.

What safeguards are in place to prevent abuse?

It's really, really hard to talk about theoretical safeguards vs. real potential harm. If Communication Safety makes grooming and exploitation significantly harder through the Messages app but results in a number of children greater than zero being outed, maybe abused, and abandoned… it can be soul-crushing either way. So, I'm going to give you the information, and you can decide where you fall on that spectrum.

First, it has to be set up as a child device to begin with. It's not enabled by default, so a parent or guardian has to have opted in.

Second, it has to be separately enabled for notifications as well, which can only be done for a child device set up as 12-years-old-or-under.

Now, someone could change the age of a child account from 13-and-over to 12-and-under, but if the account has ever been set up as 12-or-under in the past, it's not possible to change it again for that same account.

Third, the child device is notified if and when notifications are turned on for the child device.

Fourth, it only applies to sexually explicit images, so other images, whole entire text conversations, emoji, none of that would trigger the system. So, a child in an abusive situation could still text for help, either over iMessage or SMS, without any warnings or notifications.

Fifth, the child has to tap View Photo or Send Photo, has to tap again through the first warning, and then has to tap a third time through the notification warning to trigger a notification to the parent device.

Of course, people ignore warnings all the time, and young kids typically have curiosity, even reckless curiosity, beyond their cognitive development, and don't always have parents or guardians with their wellbeing and welfare at heart.

And, for people who are concerned that the system will lead to outing, that's where the concern lies.

Is there any way to prevent the parental notification?

No, if the device is set up for a 12-year-or-under account, and the parent turns on notifications, if the child chooses to ignore the warnings and see the image, the notification will be sent.

Personally, I'd like to see Apple switch the notification to a block. That would greatly reduce, maybe even prevent any potential outings and be better aligned with how other parental content control options work.

Is Messages really even a concern about grooming and predators?

Yes. At least as much as any private instant or direct messaging system. While initial contact happens on public social and gaming networks, predators will escalate to DM and IM for real-time abuse.

And while WhatsApp and Messenger and Instagram, and other networks are more popular globally, in the U.S., where this feature is being rolled out, iMessage is also popular, and especially popular among kids and teens.

And since most if not all other services have been scanning for potentially abusive images for years already, Apple doesn't want to leave iMessage as an easy, safe haven for this activity. They want to disrupt grooming cycles and prevent child predation.

Can the Communication Safety feature be enabled for non-child accounts, like to protect against dick pics?

No, Communication Safety is currently only available for accounts expressly created for children as part of a Family Sharing setup.

If the automatic blurring of unsolicited sexually explicit images is something you think should be more widely available, you can go to Apple.com/feedback or use the feature request… feature in bug reporter to let them know you're interested in more, but, at least for now, you'll need to use the block contact function in Messages… or the Alanah Pearce retaliation if that's more your style.

Will Apple be making Communication Safety available to third-party apps?

Potentially. Apple is releasing a new Screen Time API, so other apps can offer parental control features in a private, secure way. Currently, Communication Safety isn't part of it, but Apple sounds open to considering it.

What that means is that third-party apps would get access to the system that detects and blurs explicit images but would likely be able to implement their own control systems around it.

Why is Apple detecting CSAM?

In 2020, the National Center for Missing and Exploited Children, NCMEC, received over 21 million reports of abusive materials from online providers. Twenty million from Facebook, including Instagram and WhatsApp, over 546 thousand from Google, over 144 thousand from Snapchat, 96 thousand from Microsoft, 65 thousand from Twitter, 31 thousand from Imgur, 22 thousand from TikTok, 20 thousand from Dropbox.

From Apple? 265. Not 265 thousand. 265. Period.

Because, unlike those other companies, Apple isn't scanning iCloud Photo Libraries, only some emails sent through iCloud. Because, unlike those other companies, Apple felt they shouldn't be looking at the full contents of anyone's iCloud Photo Library, even to detect something as universally reviled and illegal as CSAM.

But they likewise didn't want to leave iCloud Photo Library as an easy, safe haven for this activity. And Apple didn't see this as a privacy problem so much as an engineering problem.

So, just like Apple was late to features like face detection and people search, and computer vision search and live text because they simply did not believe in or want to round trip every user image to and from their servers, or scan them in their online libraries, or operate on them directly in any way, Apple is late to CSAM detection for pretty much the same reasons.

In other words, Apple can no longer abide this material being stored on or trafficked through their servers, and aren't willing to scan whole user iCloud Photo Libraries to stop it, so to maintain as much user privacy as possible, at least in their minds, they've come up with this system instead, as convoluted, complicated, and confusing as it is.

How does CSAM detection work?

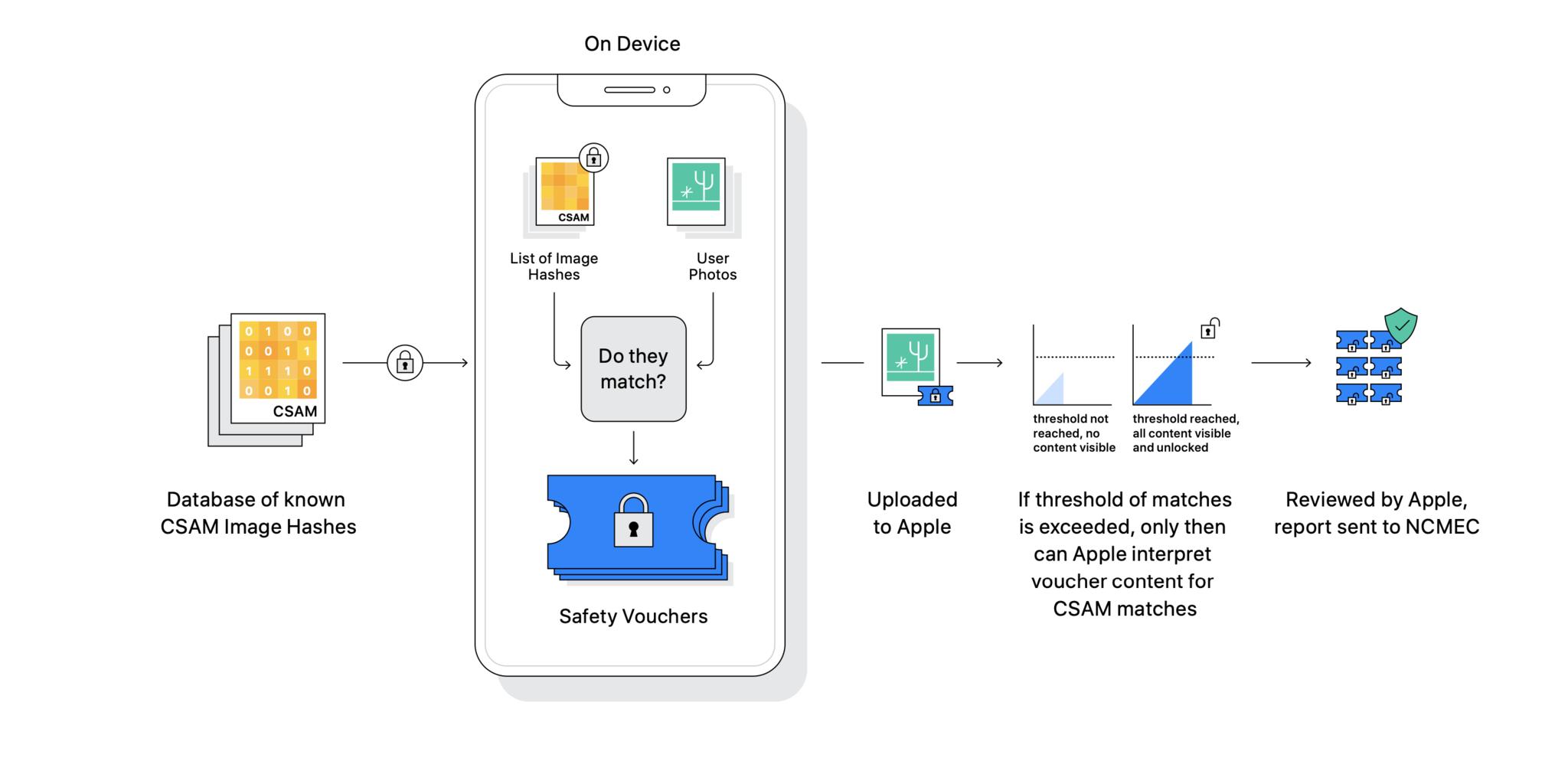

Ok, so, when iOS 15 and iPadOS 15 ship this fall in the U.S., and yes, only in the U.S. and only for iPhone and iPad for now, if you have iCloud Photo Library switched on in Settings, and yes, only if you have iCloud Photo Library switched on in Settings, Apple will start detecting for CSAM collections.

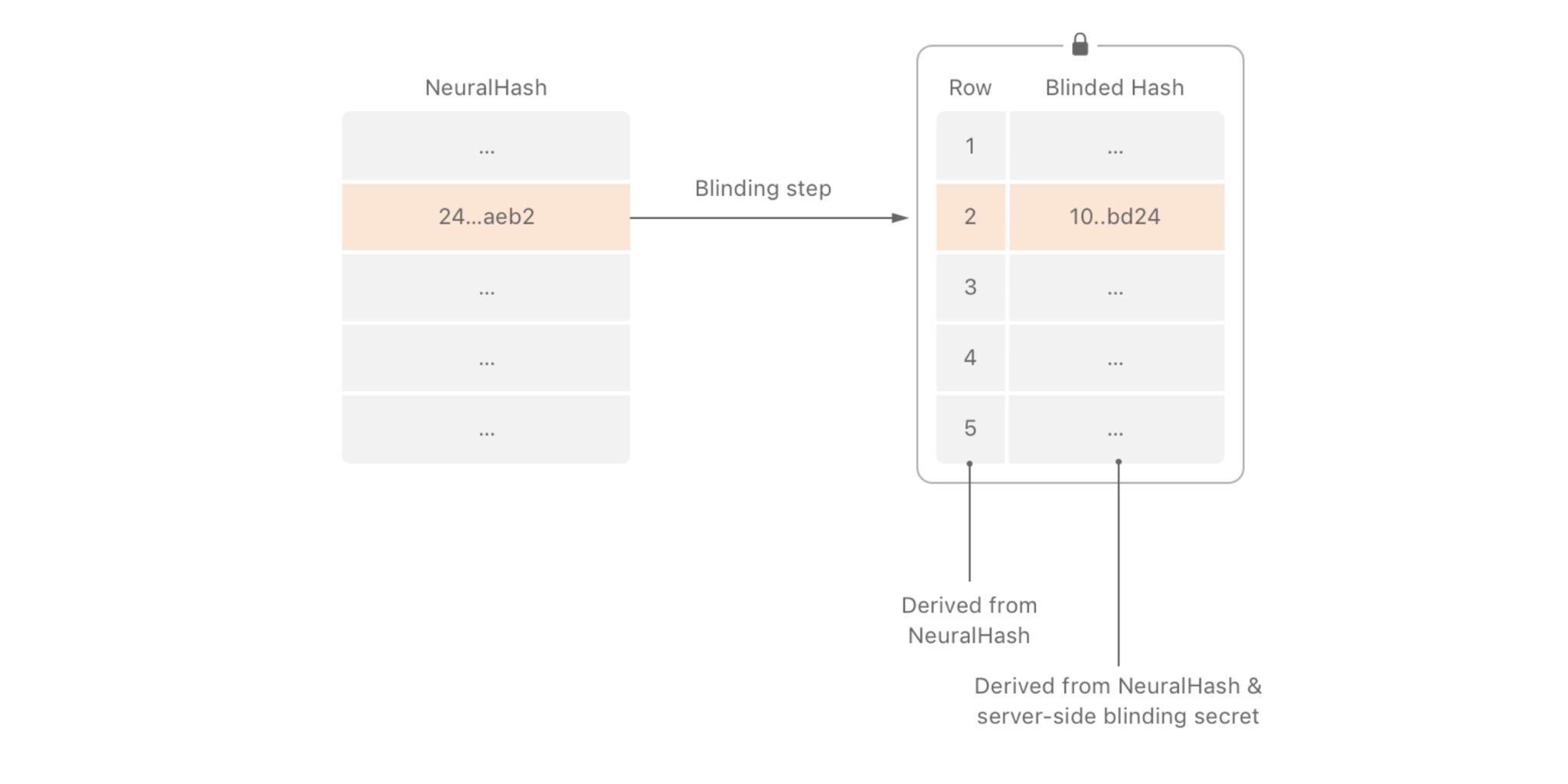

To do the detection, Apple is using a database of known CSAM image hashes provided by the National Center for Missing and Exploited Children, NCMEC, and other child safety organizations. Apple is taking this database of hashes and applying another set of transformations on top of them to make them unreadable. Which means they can't be reversed back to the original images. Not ever. And then storing that database of… re-hashes on-device. On our iPhones and iPads.

Not the images themselves. No one is putting CSAM on your iPhone or iPad. But the database of hashes is based on the hashes from the original database. And that includes a final elliptic curve blinding step, so there's absolutely no way to extract CSAM or figure out anything about the original database from what's stored on the device.

Think of the original hashes as a serial number derived from the images and the transformed hashes as an encrypted serial number. And there's no way to tell or infer what they represent.

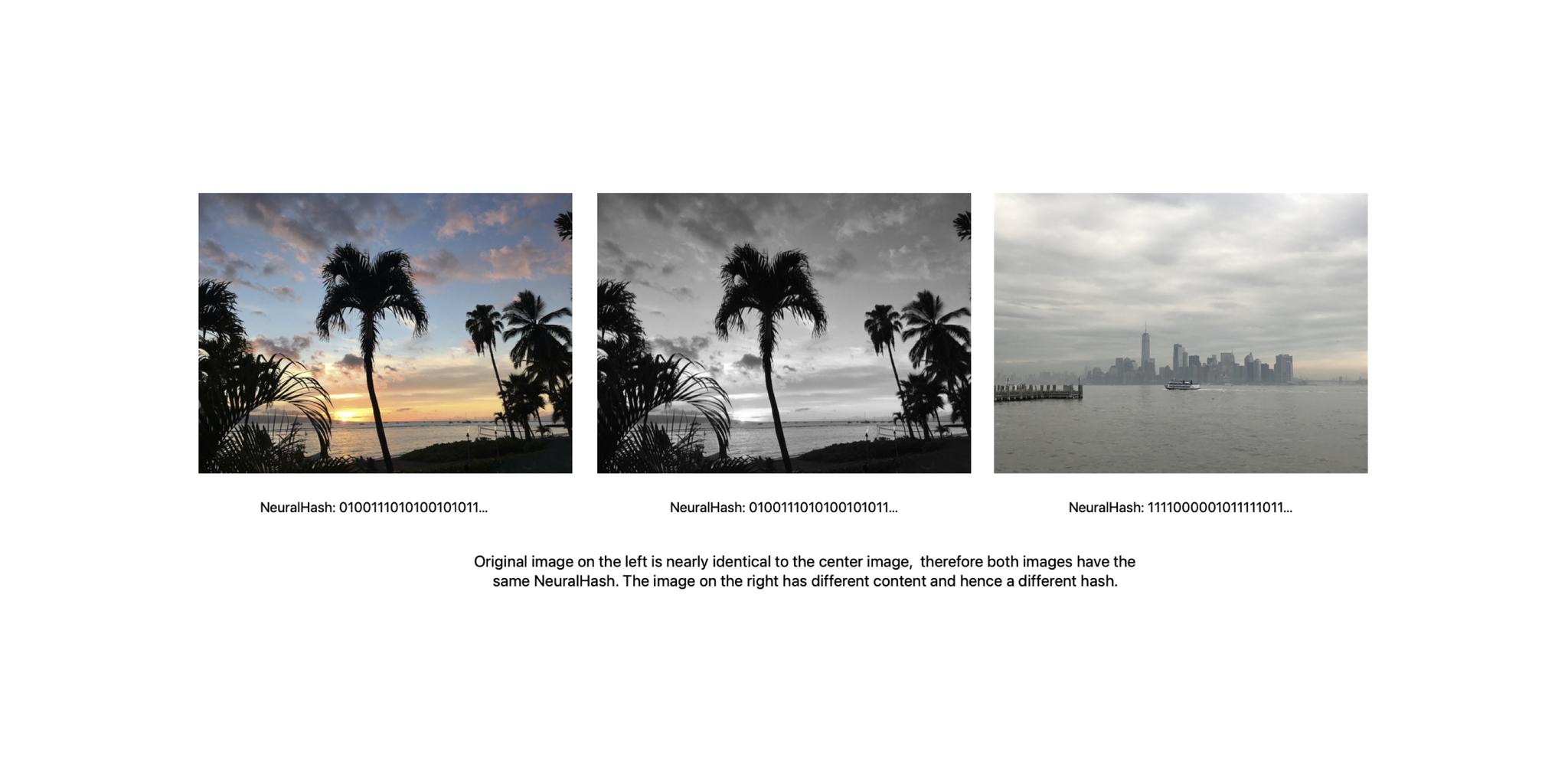

Then, when an image is being uploaded to iCloud Photo Library, and only when an image is being uploaded to iCloud Photo Library, Apple creates a hash for that image as well. That's a NeuralHash, Apple's version of a perceptual hashing function, similar to Microsoft's PhotoDNA technology, which has been used for CSAM detection for over a decade.

Neither Apple nor Microsoft has documented or shared NeuralHash or PhotoDNA, and neither plans to, because they don't want anyone to get any help in defeating them, which really frustrates InfoSec researchers.

But the gist is this — Apple isn't scanning the pixels of your photos or identifying anything about them. They're not using any form of content detection, optical scanning, or computer vision like they do in the Photos app for search. They're not figuring out if there are any cars in your photos or cats, not even if there's anything explicit or illegal in them.

Apple doesn't want to know what the image is. So NeuralHash is based on the math of the image, and it's… convoluted. Literally, an embedded, self-supervised convolutional neural network that looks at things like angular distance or cosine similarity and makes sure the same image gets the same hash, and different images get different hashes.

What NeuralHash does do is allow for matches even if the images have been cropped, desaturated, resized, lossy encoded, or otherwise modified to avoid detection. Which is what people trafficking these images tend to do.
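To make the idea of a perceptual hash concrete, here is a minimal sketch of a much simpler, well-known technique, the average hash. It is emphatically not NeuralHash or PhotoDNA, neither of which is public; it only illustrates how a hash can survive resizing or darkening while still telling different images apart. The function names and the tiny synthetic test images are assumptions made up for the example.

```python
def average_hash(pixels, grid=8):
    """64-bit average hash of a grayscale image given as a 2D list.

    Toy assumption: width and height are multiples of `grid`.
    """
    block_h = len(pixels) // grid
    block_w = len(pixels[0]) // grid
    cells = []
    for row in range(grid):
        for col in range(grid):
            # Shrink the image by averaging each block down to one value.
            block = [
                pixels[y][x]
                for y in range(row * block_h, (row + 1) * block_h)
                for x in range(col * block_w, (col + 1) * block_w)
            ]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        # One bit per cell: is it brighter than the image-wide average?
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a, b):
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


# Synthetic 16x16 gradient standing in for a photo.
original = [[8 * x + 4 * y for x in range(16)] for y in range(16)]
resized = [[original[y // 2][x // 2] for x in range(32)] for y in range(32)]
darkened = [[p * 0.5 for p in row] for row in original]
different = [[255 - p for p in row] for row in original]

print(hamming(average_hash(original), average_hash(resized)))
print(hamming(average_hash(original), average_hash(darkened)))
print(hamming(average_hash(original), average_hash(different)))
```

The resized and darkened copies hash identically to the original (distance 0), while the inverted image lands far away. A cryptographic hash like SHA-256 would treat all four as unrelated, which is why it is the wrong tool for this job.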

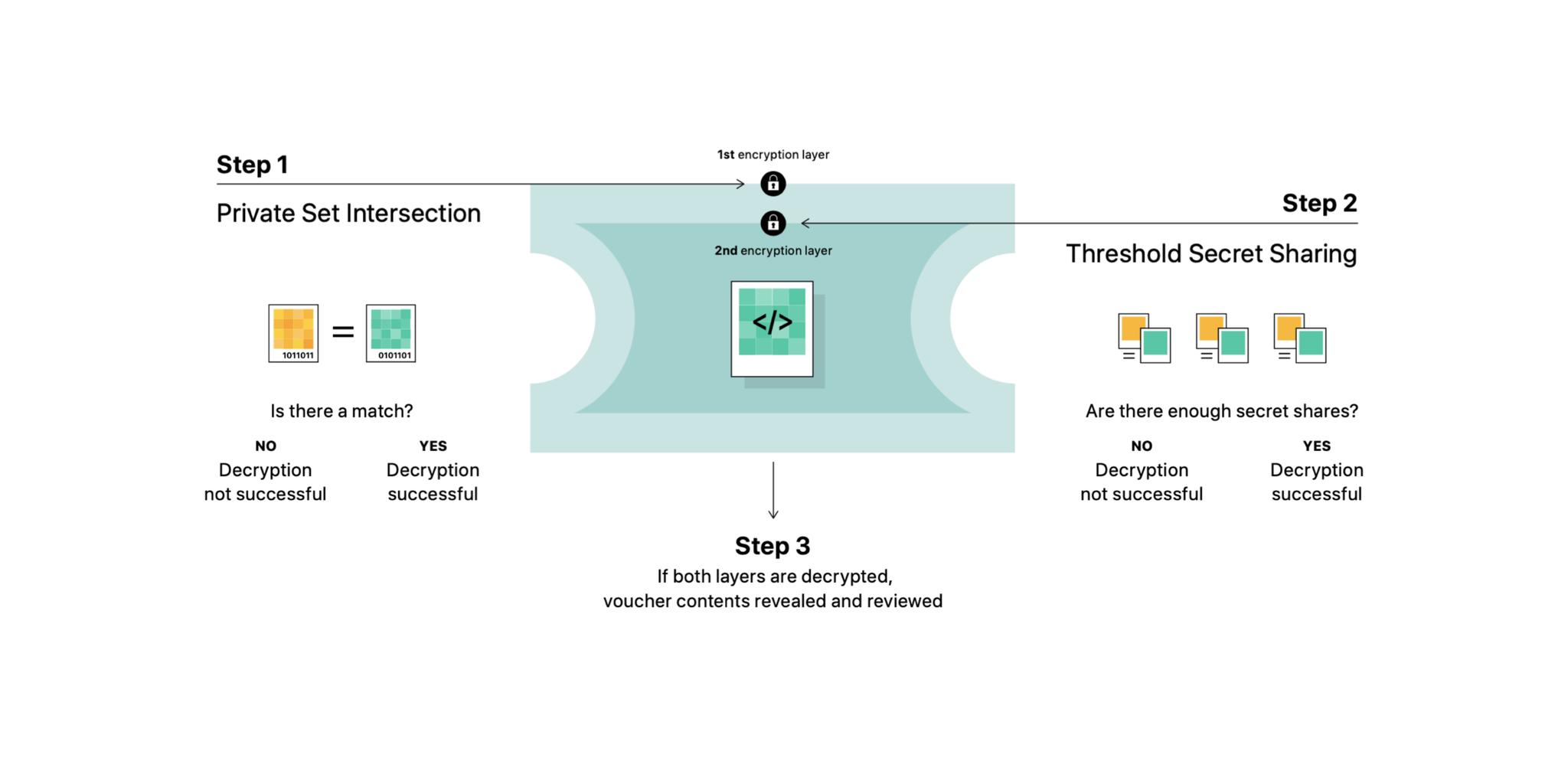

The matching process also uses a technology called Private Set Intersection, which compares the hashes of the images being uploaded to the hashes in the blinded database. But, because the database is blinded, the final step of the comparison has to be done on the iCloud server through cryptographic headers.

Think of it as an envelope. If there's no hash match, Apple can't even decrypt the header. They can't ever open the envelope and can't ever learn anything about the contents. It also makes sure the device doesn't know the results of a match because it's only completed on the server.
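The envelope idea can be sketched with a toy Diffie-Hellman style exchange. To be clear about what is assumed: this is a simplified illustration in the spirit of the description above, not Apple's protocol. It uses plain modular arithmetic where the real system uses elliptic curves, a throwaway XOR cipher, and made-up names (`Server`, `make_voucher`, `try_open`, and so on). It is not secure and leaves out the threshold and synthetic-voucher layers entirely.

```python
import hashlib
import secrets

P = 2**127 - 1  # toy prime modulus; the real system uses elliptic curves


def to_group(image_hash: bytes) -> int:
    """Toy hash-to-group: map a hash to a nonzero value mod P."""
    return int.from_bytes(hashlib.sha256(image_hash).digest(), "big") % (P - 1) + 1


def slot(image_hash: bytes, size: int) -> int:
    """The table position is derived from the hash itself."""
    return int.from_bytes(hashlib.sha256(b"slot" + image_hash).digest(), "big") % size


def _keys(element: int):
    key = hashlib.sha256(str(element).encode()).digest()
    return key, hashlib.sha256(key + b"stream").digest()


def seal(element: int, payload: bytes) -> bytes:
    """Toy encryption of up to 32 bytes under a key derived from a group element."""
    key, stream = _keys(element)
    tag = hashlib.sha256(key + payload).digest()[:8]
    return tag + bytes(a ^ b for a, b in zip(payload, stream))


def unseal(element: int, sealed: bytes):
    """Return the payload, or None if the derived key is wrong."""
    key, stream = _keys(element)
    payload = bytes(a ^ b for a, b in zip(sealed[8:], stream))
    return payload if hashlib.sha256(key + payload).digest()[:8] == sealed[:8] else None


class Server:
    def __init__(self, known_hashes, size=1024):
        self.secret = secrets.randbelow(P - 2) + 1
        # Unused slots hold random filler so filled and empty slots look alike.
        self.blinded_table = [secrets.randbelow(P - 1) + 1 for _ in range(size)]
        for h in known_hashes:  # toy: a slot collision would overwrite an entry
            self.blinded_table[slot(h, size)] = pow(to_group(h), self.secret, P)

    def try_open(self, q, sealed):
        # Only the holder of the server secret can finish the comparison.
        return unseal(pow(q, self.secret, P), sealed)


def make_voucher(blinded_table, image_hash: bytes, payload: bytes):
    """Device side: always emits a voucher and never learns whether it matched."""
    r = secrets.randbelow(P - 2) + 1
    entry = blinded_table[slot(image_hash, len(blinded_table))]
    return pow(to_group(image_hash), r, P), seal(pow(entry, r, P), payload)


server = Server([b"known-hash-1"])
print(server.try_open(*make_voucher(server.blinded_table, b"known-hash-1", b"voucher A")))
print(server.try_open(*make_voucher(server.blinded_table, b"holiday-photo", b"voucher B")))
```

When the uploaded hash is in the database, the device and server end up deriving the same key and the envelope opens. When it isn't, the server derives a different key and gets nothing. The device runs exactly the same steps either way, so it can't tell which case it was in.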

Inside the envelope is a cryptographic safety voucher encoded with the match result, the NeuralHash, and a visual derivative, and attached to the image as it's uploaded to iCloud Photo Library. And… that's it. That's all. Nothing else happens at that point.

Because, in part, in the rare instance there's a hash collision or false positive, it doesn't really matter. The system is only ever designed to detect collections.

To do that, Apple is using something called threshold secret sharing. This is a terrible way to think about it but think about it like this: There's a box that can be opened with any 20 out of 1000 secret words. You can't open it without 20 of the words, but it doesn't matter which 20 words you get. If you only get 19, no joy. If and when you get to 20 or more, bingo.

Apple can't tell what's in a single matched safety voucher. Only when the threshold is reached is that secret shared with Apple. So if the threshold is never reached, Apple will never know what's in any of the matched safety vouchers. Once the threshold is met, Apple can open all the matched safety vouchers.
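The "any 20 out of 1000 words" box is a fair description of Shamir's secret sharing, the textbook threshold scheme. Here is a minimal sketch of it. Whether Apple's implementation looks anything like this is an assumption, and the numbers 20 and 1000 are just the ones from the analogy above, not Apple's actual threshold. `make_shares` and `recover` are illustrative names.

```python
import secrets

PRIME = 2**127 - 1  # field size; any prime larger than the secret works


def make_shares(secret: int, threshold: int, count: int):
    """Split `secret` into `count` shares; any `threshold` of them recover it."""
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def evaluate(x):
        acc = 0
        for c in reversed(coeffs):  # Horner's rule
            acc = (acc * x + c) % PRIME
        return acc

    return [(x, evaluate(x)) for x in range(1, count + 1)]


def recover(shares):
    """Lagrange interpolation at x = 0 over the prime field."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


voucher_key = 123456789  # stands in for the key that opens the vouchers
shares = make_shares(voucher_key, threshold=20, count=1000)

print(recover(shares[:20]) == voucher_key)      # any 20 shares work
print(recover(shares[300:320]) == voucher_key)  # a different 20 work too
print(recover(shares[:19]) == voucher_key)      # 19 shares give an unrelated number
```

With 19 shares, `recover` still returns a number, but it is effectively random. There is no "almost open" state, which is the property the box analogy is getting at.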

Now, Apple won't say what the threshold is because they don't want any CSAM traffickers to deliberately stay just beneath it, but they have said it's set high enough to ensure as high a degree of accuracy, and prevent as many incorrect flags, as possible. And that manual-as-in-human review is mandated to further reduce any possibility of incorrect flags. Apple says the chance of incorrectly flagging a given account is less than one in one trillion per year.
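A rough back-of-the-envelope calculation shows why requiring multiple matches drives the false-flag rate down so sharply. Every number here is a hypothetical chosen for illustration, none of them come from Apple: a library of 10,000 photos, a per-image false match rate of one in a million, and false matches that are independent of each other. Under those assumptions the number of false matches follows a binomial distribution, and `prob_at_least` (an illustrative name) sums its upper tail.

```python
import math


def prob_at_least(matches: int, photos: int, per_image_rate: float) -> float:
    """P(X >= matches) for X ~ Binomial(photos, per_image_rate).

    Terms are computed in log space so large binomial coefficients
    don't overflow.
    """
    log_p = math.log(per_image_rate)
    log_q = math.log1p(-per_image_rate)
    total = 0.0
    for k in range(matches, photos + 1):
        log_term = (
            math.lgamma(photos + 1) - math.lgamma(k + 1) - math.lgamma(photos - k + 1)
            + k * log_p + (photos - k) * log_q
        )
        total += math.exp(log_term)
    return total


# Hypothetical inputs, not Apple's figures.
for threshold in (1, 5, 20):
    print(threshold, prob_at_least(threshold, photos=10_000, per_image_rate=1e-6))
```

With a threshold of 1, roughly one such library in a hundred would trip a false flag. Each extra required match multiplies the odds down by orders of magnitude, so by a threshold of 20 the probability is vanishingly small. Real false matches may not be independent, which is one reason the human review step still matters.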

To make it even more complicated… and secure… to avoid Apple ever learning about the actual number of matches before reaching the threshold, the system will also periodically create synthetic match vouchers. These will pass the header check, the envelope, but not contribute towards the threshold, the ability to open any and all matched safety vouchers. So, what it does, is make it impossible for Apple to ever know for sure how many real matches exist because it'll be impossible to ever know for sure how many of them are synthetic.

So, if the hashes match, Apple can decrypt the header or open the envelope, and if and when they reach the threshold for number of real matches, then they can open the vouchers.

But, at that point, it triggers a manual-as-in-human review process. The reviewer checks each voucher to confirm there are matches, and if the matches are confirmed, at that point, and only at that point, Apple will disable the user's account and send a report to NCMEC. Yes, not to law enforcement, but to the NCMEC.

If even after the hash matching, the threshold, and the manual review, the user feels their account was flagged by mistake, they can file an appeal with Apple to get it reinstated.

So Apple just created the back door into iOS they swore never to create?

Apple, to the surprise of absolutely no one, says unequivocally it's not a back door and was expressly and purposely designed not to be a back door. It only triggers on upload and is a server-side function that requires device-side steps to function only in order to better preserve privacy, but it also requires the server-side steps in order to function at all.

Apple also says it was designed to prevent Apple from having to scan photo libraries on the server, which they see as being a far worse violation of privacy.

I'll get to why a lot of people see that device-side step as the much worse violation in a minute.

But Apple maintains that if anyone, including any government, thinks this is a back door or establishes the precedent for back doors on iOS, they'll explain why it's not true, in exacting technical detail, over and over again, as often as they need to.

Which, of course, may or may not matter to some governments, but more on that in a minute as well.

Doesn't Apple already scan iCloud Photo Library for CSAM?

No. They've been scanning some iCloud email for CSAM for a while now, but this is the first time they've done anything with iCloud Photo Library.

So Apple is using CSAM as an excuse to scan our photo libraries now?

No. That's the easy, typical way to do it. It's how most other tech companies have been doing it going on a decade now. And it would have been easier, maybe for everyone involved, if Apple just decided to do that. It would still have made headlines because Apple, and resulted in pushback because of Apple's promotion of privacy not just as a human right but as a competitive advantage. But since it's so much the industry norm, that pushback might not have been as big a deal as what we're seeing now.

But, Apple wants nothing to do with scanning full user libraries on their servers because they want as close to zero knowledge as possible of our images stored even on their servers.

So, Apple engineered this complex, convoluted, confusing system to match hashes on-device, and only ever let Apple know about the matched safety vouchers, and only if a large enough collection of matching safety vouchers were ever uploaded to their server.

See, in Apple's mind, on-device means private. It's how they do face recognition for photo search, subject identification for photo search, suggested photo enhancements — all of which, by the way, involve actual photo scanning, not just hash matching, and have for years.

It's also how suggested apps work and how Live Text and even Siri voice-to-text will work come the fall so that Apple doesn't have to transmit our data and operate on it on their servers.

And, for the most part, everyone's been super happy with that approach because it doesn't violate our privacy in any way.

But when it comes to CSAM detection, even though it's only hash matching and not scanning any actual images, and only being done on upload to iCloud Photo Library, not local images, having it done on-device feels like a violation to some people. Because everything else, every other feature I just mentioned, is only ever being done for the user and is only ever returned to the user unless the user explicitly chooses to share it. In other words, what happens on the device stays on the device.

CSAM detection is being done not for the user but for exploited and abused children, and the results aren't only ever returned to the user — they're sent to Apple and can be forwarded on to NCMEC and from them to law enforcement.

When other companies do that on the cloud, some users somehow feel like they've consented to it, like it's on the company's servers now, so it's not really theirs anymore, and so it's ok. Even if it makes what the user stores there profoundly less private as a result. But when even that one small hash matching component is done on the user's own device, some users don't feel like they've given the same implicit consent, and to them, that makes it not ok even if Apple believes it's more private.

How will Apple be matching images already in iCloud Photo Library?

Unclear, though Apple says they will be matching them. Since Apple seems unwilling to scan online libraries, it's possible they'll simply do the on-device matching over time as images are moved back and forth between devices and iCloud.

Why can't Apple implement the same hash matching process on iCloud and not involve our devices at all?

According to Apple, they don't want to know about non-matching images at all. So, handling that part on-device means iCloud Photo Library only ever knows about the matching images, and only vaguely due to synthetic matches, unless and until the threshold is met and they're able to decrypt the vouchers.

If they were to do the matching entirely on iCloud, they'd have knowledge of all the non-matches as well.

So… let's say you have a bunch of red and blue blocks. If you drop all the blocks, red and blue, off at the police station and let the police sort them, they know all about all your blocks, red and blue.

But, if you sort the red blocks from the blue and then drop off only the blue blocks at the local police station, the police only know about the blue blocks. They know nothing about the red.

And, in this example, it's even more complicated because some of the blue blocks are synthetic, so the police don't know the true number of blue blocks, and the blocks represent things the police can't understand unless and until they get enough of the blocks.

But some people do not care about this distinction, like at all, or would even prefer, or are happily willing, to get the database and matching off their devices and let the police sort all the blocks their damn selves, rather than feel the sense of violation that comes with having to sort the blocks themselves for the police.

It feels like a preemptive search of a private home by a storage company, instead of the storage company searching their own warehouse, including whatever anyone knowingly chose to store there.

It feels like the metal detectors are being taken out of a single stadium and put into every fan's side doors because the sports ball club doesn't want to make you walk through them on their premises.

All that to explain why some people are having such visceral reactions to this.

Isn't there any way to do this without putting anything on device?

I'm as far from a privacy engineer as you can get, but I'd like to see Apple take a page from Private Relay and process the first part of the encryption, the header, on a separate server from the second, the voucher, so no on-device component is needed, and Apple still wouldn't have perfect knowledge of the matches.

Something like that, or smarter, is what I'd personally love to see Apple explore.

Can you turn off or disable CSAM detection?

Yes, but you have to turn off and stop using iCloud Photo Library to do it. That's implicitly stated in the white papers, but Apple said it explicitly in the press briefings.

Because the on-device database is intentionally blinded, the system requires the secret key on iCloud to complete the hash matching process, so without iCloud Photo Library, it's literally non-functional.

Can you turn off iCloud Photo Library and use something like Google Photos or Dropbox instead?

Sure, but Google, Dropbox, Microsoft, Facebook, Imgur, and pretty much every other major tech company have been doing full-on server-side CSAM scanning for up to a decade or more already.

If that doesn't bother you as much or at all, you can certainly make the switch.

So, what can someone who is a privacy absolutist do?

Turn off iCloud Photo Library. That's it. If you still want to back up, you can still back up directly to your Mac or PC, including an encrypted backup, and then just manage it as any local backup.

What happens if grandpa or grandma take a photo of grandbaby in the bath?

Nothing. Apple is only looking for matches to the known, existing CSAM images in the database. They continue to want zero knowledge when it comes to your own, personal, novel images.

So Apple can't see the images on my device?

No. They're not scanning the images at the pixel level at all, not using content detection or computer vision or machine learning or anything like that. They're matching the mathematical hashes, and those hashes can't be reverse-engineered back to the images or even opened by Apple unless they match known CSAM in sufficient numbers to pass the threshold required for decryption.

Then this does nothing to prevent the generation of new CSAM images?

On-device, in real-time, no. New CSAM images would have to go through NCMEC or a similar child safety organization and be added to the hash database that's provided to Apple, and Apple would then have to relay it as an update to iOS and iPadOS.

The current system only works to prevent known CSAM images from being stored or trafficked through iCloud Photo Library.

So, yes, as much as some privacy advocates will think Apple has gone too far, there are likely some child safety advocates who will think Apple still hasn't gone far enough.

Apple kicks legitimate apps out of the App Store and allows in scams all the time; what's to guarantee they'll do any better at CSAM detection, where the consequences of a false positive are far, far more harmful?

They're different problem spaces. The App Store is similar to YouTube in that you have unbelievably massive amounts of highly diversified user-generated content being uploaded at any given time. They do use a combination of automated and manual, machine and human review, but they still falsely reject legitimate content and allow in scam content. Because the tighter they tune, the more false positives, and the looser they tune, the more scams. So, they're constantly adjusting to stay as close as they can to the middle, knowing that at their scale, there'll always be some mistakes made on both sides.

With CSAM, because it's a known target database that's being matched against, it vastly reduces the chances for error. Because it requires multiple matches to reach the threshold, it further reduces the chance for error. Because, even after the multiple match threshold is met, it still requires human review, and because checking a hash match and visual derivative is much less complex than checking a whole entire app — or video — it further reduces the chance for error.

This is why Apple is sticking with their one in a trillion accounts per year false matching rate, at least for now. Which they would never, not ever do for App Review.
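The effect of requiring multiple matches can be sketched with some arithmetic. The numbers below are hypothetical, not Apple's: assume each photo has an independent per-image false match probability `p`, a library holds `n` photos, and an account is only flagged once `t` or more false matches pile up. The function name `prob_at_least` is made up for this example, and real images are not perfectly independent, so treat this as an illustration of the trend only.

```python
from math import exp, lgamma, log, log1p


def prob_at_least(n, p, t):
    """P(at least t false matches among n photos), binomial model, 0 < p < 1."""
    total = 0.0
    for k in range(t, n + 1):
        # Log of the binomial term C(n, k) * p^k * (1 - p)^(n - k),
        # computed in log space so huge or tiny factors do not overflow.
        log_term = (
            lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
            + k * log(p)
            + (n - k) * log1p(-p)
        )
        total += exp(log_term)
    return total


if __name__ == "__main__":
    # Hypothetical inputs: 10,000 photos, one-in-a-million per-image error.
    for threshold in (1, 2, 5, 10):
        print(threshold, prob_at_least(10_000, 1e-6, threshold))
```

Under those assumptions, each extra match required multiplies the chance of a false flag down by orders of magnitude, which is the intuition behind pairing a per-image hash match with a multi-match threshold.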

If Apple can detect CSAM, can't they use the same system to detect anything and everything else?

This is going to be another complicated, nuanced discussion. So, I'm just going to say up front that anyone who says people who care about child exploitation don't care about privacy, or anyone who says people who care about privacy are a screeching minority that doesn't care about child exploitation, are just beyond disingenuous, disrespectful, and… gross. Don't be those people.

So, could the CSAM system be used to detect images of drugs or unannounced products or copyrighted photos or hate speech memes or historical demonstrations, or pro-democracy flyers?

The truth is, Apple can theoretically do anything on iOS they want, any time they want, but that's no more or less true today with this system in place than it was a week ago before we knew it existed. That includes the much, much easier implementation of just doing actual full image scans of our iCloud Photo Libraries. Again, like most other companies do.

Apple created this very narrow, multi-layer, frankly kinda all shades of slow and inconvenient for everybody but the user involved, system to, in their minds, retain as much privacy and prevent as much abuse as possible.

It requires Apple to set up, process, and deploy a database of known images, and only detects a collection of those images on upload that pass the threshold, and then still requires manual review inside Apple to confirm matches.
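Those layers can be sketched as a simple gate. This is a heavily simplified model, not Apple's implementation: it uses SHA-256 as a stand-in for the real image hash, a plain counter instead of the cryptographic threshold, and a hypothetical `THRESHOLD` of 3. Every name here (`image_hash`, `Account`, `on_upload`, `review`) is invented for the example.

```python
import hashlib
from dataclasses import dataclass, field

THRESHOLD = 3  # hypothetical value, chosen only to keep the example small


def image_hash(data: bytes) -> str:
    # Stand-in hash: SHA-256 only matches byte-identical files,
    # unlike the image-matching hash the real system relies on.
    return hashlib.sha256(data).hexdigest()


@dataclass
class Account:
    matches: list = field(default_factory=list)


def on_upload(account: Account, data: bytes, known_hashes: set) -> None:
    """Record a match only when the upload's hash is in the known database."""
    h = image_hash(data)
    if h in known_hashes:
        account.matches.append(h)


def review(account: Account, reviewer_confirms) -> str:
    """Below the threshold nothing happens; above it, a human still decides."""
    if len(account.matches) < THRESHOLD:
        return "no action"
    if all(reviewer_confirms(h) for h in account.matches):
        return "report"
    return "rejected"
```

In this toy version, novel photos never enter `matches`, a match count below the threshold never reaches a reviewer, and a match the reviewer doesn't confirm ends in "rejected" rather than a report.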

That's… non-practical for most other uses. Not all, but most. And those other uses would still require Apple to agree to expanding the database or databases or lowering the threshold, which is also no more or less likely than requiring Apple to agree to those full image scans on iCloud Libraries to begin with.

There could be an element of boiling the water, though, where introducing the system now to detect CSAM, which is tough to object to, will make it easier to slip in more detection schemes in the future, like terrorist radicalization material, which is also tough to object to, and then increasingly less and less universally reviled material, until there's no-one and nothing left to object to.

And, regardless of how you feel about CSAM detection in specific, that kind of creep is something that will always require all of us to be ever more vigilant and vocal about it.

What's to stop someone from hacking additional, non-CSAM images into the database?

If a hacker, state-sponsored or otherwise, were to somehow infiltrate NCMEC or one of the other child safety organizations, or Apple, and inject non-CSAM images into the database to create collisions, false positives, or to detect for other images, ultimately, any matches would end up at the manual human review at Apple and be rejected for not being an actual match for CSAM.

And that would trigger an internal investigation to determine if there was a bug or some other problem in the system or with the hash database provider.

But in either case… any case, what it wouldn't do is trigger a report from Apple to NCMEC or from them to any law enforcement agency.

That's not to say it would be impossible, or that Apple considers it impossible and isn't always working on more and better safeguards, but their stated goal with the system is to make sure people aren't storing CSAM on their servers and to avoid any knowledge of any non-CSAM images anywhere.

What's to stop another government or agency from demanding Apple increase the scope of detection beyond CSAM?

Part of the protections around overt government demands are similar to the protections against covert individual hacks of non-CSAM images in the system.

Also, while the CSAM system is currently U.S.-only, Apple says that it has no concept of regionalization or individualization. So, theoretically, as currently implemented, if another government wanted to add non-CSAM image hashes to the database, first, Apple would just refuse, same as they would do if a government demanded full image scans of iCloud Photo Library or exfiltration of computer vision-based search indexes from the Photos app.

Same as they have when governments have previously demanded back doors into iOS for data retrieval. Including the refusal to comply with extra-legal requests and the willingness to fight what they consider to be that kind of government pressure and overreach.

But we'll only ever know and see that for sure on a court case by case basis.

Also, any non-CSAM image hashes would match not just in the country that demanded they be added but globally, which could and would raise alarm bells in other countries.

Doesn't the mere fact of this system now existing signal that Apple now has the capability and thus embolden governments to make those kinds of demands, either with public pressure or under legal secrecy?

Yes, and Apple seems to know and understand that… the perception is reality function here may well result in increased pressure from some governments. Including and especially the government already exerting just exactly that kind of pressure, so far so ineffectively.

But what if Apple does cave? Because the on-device database is unreadable, how would we even know?

Given Apple's history with data repatriation to local servers in China, or Russian borders in Maps and Taiwan flags in emoji, even Siri utterances being quality assured without explicit consent, what happens if Apple does get pressured into adding to the database or adding more databases?

Because iOS and iPadOS are single operating systems deployed globally, and because Apple is so popular, and therefore — equal and opposite reaction — under such intense scrutiny from… everyone from papers of record to code divers, the hope is it would be discovered or leaked, like the data repatriation, borders, flags, and Siri utterances. Or signaled by the removal or modification of text like "Apple has never been asked nor required to extend CSAM detection."

And given the severity of potential harm, with equal severity of consequences.

What happened to Apple saying privacy is a Human Right?

Apple still believes privacy is a human right. Where they've evolved over the years, back and forth, is in how absolute or pragmatic they've been about it.

Steve Jobs, even back in the day, said privacy is about informed consent. You ask the user. You ask them again and again. You ask them until they tell you to stop asking them.

But privacy is, in part, based on security, and security is always at war with convenience.

I've learned this, personally, the hard way over the years. My big revelation came when I was covering data backup day, and I asked a popular backup utility developer how to encrypt my backups. And he told me to never, not ever do that.

Which is… pretty much the opposite of what I'd heard from the very absolutist infosec people I'd been speaking to before. But the dev very patiently explained that for most people, the biggest threat wasn't having their data stolen. It was losing access to their data. Forgetting a password or damaging a drive. Because an encrypted drive can't ever, not ever be recovered. Bye-bye wedding pictures, bye baby pictures, bye everything.

So, every person has to decide for themselves which data they'd rather risk being stolen than lose and which data they'd rather risk losing than being stolen. Everyone has the right to decide that for themselves. And anyone who yells otherwise that full encryption or no encryption is the only way is… a callous, myopic asshole.

Apple learned the same lesson around iOS 7 and iOS 8. The first version of 2-step verification they rolled out required users to print and keep a long alphanumeric recovery key. Without it, if they forgot their iCloud password, they'd lose their data forever.

And Apple quickly learned just how many people forget their iCloud passwords and how they feel when they lose access to their data, their wedding and baby pictures, forever.

So, Apple created the new 2-factor authentication, which got rid of the recovery key and replaced it with an on-device token. But because Apple could store the keys, they could also put a process in place to recover accounts. A strict, slow, sometimes frustrating process. But one that vastly reduced the amount of data loss. Even if it slightly increased the chances of data being stolen or seized because it left the backups open to legal demands.

The same thing happened with health data. In the beginning, Apple locked it down more strictly than they'd ever locked anything down before. They didn't even let it sync over iCloud. And, for the vast majority of people, that was super annoying, really an inconvenience. They'd change devices and lose access to it, or if they were medically incapable of managing their own health data, they'd be unable to benefit from it partially or entirely.

So, Apple created a secure way to sync health data over iCloud and has been adding features to let people share medical information with health care professionals and, most recently, with family members.

And this applies to a lot of features. Notifications and Siri on the Lock Screen can let people shoulder surf or access some of your private data, but turning them off makes your iPhone or iPad way less convenient.

And XProtect, which Apple uses to scan for known malware signatures on-device, because the consequences of infection, they believe, warrant the intervention.

And FairPlay DRM, which Apple uses to verify playback against their servers, and apoplectically, prevent screenshots of copy-protected videos on our own personal devices. Which, because they want to deal with Hollywood, they believe warrants the intervention.

Now, obviously, for a wide variety of reasons, CSAM detection is completely different in kind. Most especially because of the reporting mechanism that will, if the match threshold is met, alert Apple as to what's on our phones. But, because Apple is no longer willing to abide CSAM on their servers and won't do full iCloud Photo Library scans, they believe it warrants the partially on-device intervention.

Will Apple be making CSAM detection available to third-party apps?

Unclear. Apple has only talked about potentially making the explicit photo blurring in Communication Safety available to third-party apps at some point, not CSAM detection.

Because other online storage providers already scan libraries for CSAM, and because the human review process is internal to Apple, the current implementation seems less than ideal for third parties.

Is Apple being forced to do CSAM detection by the government?

I've not seen anything to indicate that. There are new laws being tabled in the EU, the UK, Canada, and other places that put much higher burdens and penalties on platform companies, but the CSAM detection system isn't being rolled out in any of those places yet. Just the U.S., at least for now.

Is Apple doing CSAM detection to reduce the likelihood anti-encryption laws will pass?

Governments like the U.S., India, and Australia, among others, have been talking about breaking encryption or requiring back doors for many years already. And CSAM and terrorism are often the most prominent reasons cited in those arguments. But the current system only detects CSAM and only in the U.S., and I've not heard anything to indicate that's the motivation here either.

Has there been a huge exposé in the media to prompt Apple doing CSAM detection, like the ones that prompted Screen Time?

There have been some, but nothing I'm aware of that's both recent and specifically and publicly targeted at Apple.

So is CSAM Detection just a precursor to Apple enabling full end-to-end encryption of iCloud backups?

Unclear. There've been rumors about Apple enabling that as an option for years. One report said the FBI asked Apple not to enable encrypted backups because it would interfere with law enforcement investigations, but my understanding is that the real reason was that there were such a vast number of people locking themselves out of their accounts and losing their data that it convinced Apple not to go through with it for backups, at least at the time.

But now, with new systems like Recovery Contacts coming to iOS 15, that would conceivably mitigate against account lockout and allow for full, end-to-end encryption.

How do we let Apple know what we think?

Go to apple.com/feedback, file with bug reporter, or write an email or good, old-fashioned letter to Tim Cook. Unlike WarGames, with this type of stuff, the only way to lose is not to play.

from iMore - Learn more. Be more.

via TechnW3
